On Workforce Transformation, Generative AI, Centaurs & Cyborgs

Posted: September 28th, 2023 | Author: Domingo | Filed under: Uncategorized | Tags: AI, GenAI, GenerativeAI, Workforce Transformation

Much has been said about the impact of Generative AI on the workforce over the past months, and many more pages will be written in the coming ones.

On September 15th, 2023, researchers from Harvard Business School and MIT Sloan School of Management, among other institutions, published the paper “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality”. A few months earlier, in June, McKinsey & Company had published a notable report titled “The Economic Potential of Generative AI”.

Several of the ideas put forward in them are worth pondering.

The paper by Harvard Business School and MIT Sloan School of Management examined the performance implications of AI on complex, knowledge-intensive tasks, using controlled field experiments with highly skilled professionals. The experiments involved two sets of tasks: one inside and one outside the potential technological frontier of GPT-4, OpenAI's large language model. Participants were randomly assigned to one of three experimental conditions: no AI, AI only, or AI plus overview. The “overview condition” provided participants with additional materials that explained the strengths and limitations of GPT-4, as well as effective usage strategies.

Understanding the implications of LLMs for the work of organizations and individuals has taken on urgency among scholars, workers, companies, and even governments. Beyond their technical differences from previous forms of machine learning, three aspects of LLMs suggest they will have a much more rapid and widespread impact on work.

- The first is that LLMs have surprising capabilities that they were not specifically created to have, and these capabilities are growing rapidly as model size and quality improve. Although trained as general models, LLMs demonstrate specialist knowledge and abilities, both as part of their training process and during normal use.

- The general ability of LLMs to solve domain-specific problems leads to the second factor that sets them apart from previous approaches to AI: their ability to directly increase the performance of the workers who use them, without the need for substantial organizational or technological investment. Early studies of the new generation of LLMs suggest direct performance gains from using AI, especially for writing and programming tasks, as well as for ideation and creative work. As a result, AI is expected to have the greatest effect on the most creative, highly paid, and highly educated workers.

- The final relevant characteristic of LLMs is their relative opacity. Their advantages, while substantial, are often unclear to users: AI performs well at some jobs and fails in other circumstances in ways that are difficult to predict in advance.

Taken together, these three factors suggest that the value and downsides of AI may currently be difficult for workers and organizations to grasp. Some tasks one might not expect (like idea generation) are easy for AI, while other tasks that seem easy for machines (like basic math) are a challenge for some LLMs. This creates an uneven, blurred frontier, in which tasks that appear to be of similar difficulty may be performed either better or worse by humans using AI.

Understanding how AI will affect work therefore requires understanding how human interaction with AI changes depending on where a task sits relative to this frontier, and how the frontier itself will change over time. The current generation of LLMs can deliver significant increases in quality and productivity, or even completely automate some tasks, but which tasks AI can actually do is surprising and not immediately obvious to individuals, or even to the producers of LLMs themselves, because the frontier keeps expanding and changing. According to the study, AI significantly improved human performance on tasks within the frontier. Outside it, people relied too heavily on the AI and were more likely to make mistakes. Not all users navigated the uneven frontier with equal skill. Understanding the characteristics and behaviors of these participants may prove important as organizations look for ways to identify and develop talent for effective collaboration with AI tools.

The Harvard and MIT research identified two predominant patterns in how people approach AI. The first is Centaur behavior: named after the mythical creature that is half human and half horse, this approach involves a strategic division of labor between human and machine, closely fused together. Users with this strategy switch between AI and human tasks, allocating responsibilities based on the strengths and capabilities of each. They discern which tasks are best suited for human intervention and which can be efficiently handled by AI. The second approach is Cyborg behavior. In this stance, users don’t just delegate tasks; they intertwine their efforts with AI at the very frontier of its capabilities.

On tasks outside the frontier, using AI led to decreases in performance. Professionals whose performance dropped when using AI tended to adopt its output blindly and question it less. Navigating the frontier requires expertise, which will need to be built through formal education, on-the-job training, and employee-driven upskilling. Moreover, the optimal AI strategy might vary depending on a company’s production function: while some organizations might prioritize consistently high average output, others might value maximum exploration and innovation.

Overall, AI seems poised to significantly impact human cognition and problem-solving. Just as the internet and web browsers dramatically reduced the marginal cost of sharing information, AI may be lowering the costs associated with human thinking and reasoning.

The era of generative AI is just beginning. Excitement over this technology is palpable, and early pilots are compelling. Forecasting its adoption curve, however, is extremely difficult. Historically, technologies have taken 8 to 27 years from commercial availability to reach a plateau in adoption. Some argue that generative AI will be adopted faster because it is easy to deploy. McKinsey estimates that, even in its earliest scenario, it will take at least eight years for adoption to reach a global plateau.

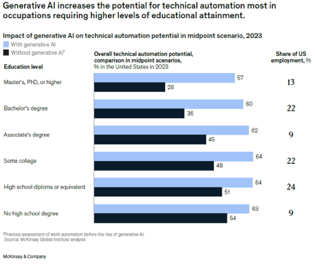

One final note about the labor impact of GenAI: economists have often noted that automation technologies tend to have the most impact on workers with the lowest skill levels, as measured by educational attainment, a pattern described as skill-biased. The McKinsey analysis suggests that generative AI follows the opposite pattern: it is likely to have the most incremental impact by automating some of the activities of more-educated workers.

Nevertheless, fully realizing the technology’s benefits will take time, and leaders in business and society still have considerable challenges to address. These include managing the risks inherent in generative AI, determining what new skills and capabilities the workforce will need, and rethinking core business processes such as retraining and developing new skills.