Posted: April 4th, 2016 | Author: Domingo | Filed under: Artificial Intelligence | Tags: Dox, Engineered bacteria, FRET, Nanomedicine, Nanoporphyrin, Synthetic biology | Comments Off on Synthetic Biology and Nanomedicine: Two AI Approaches for Removing Tumor Cells

Returning to the topic of my post Multi-agent AI Nanorobots against Tumors, this time I’m going to explain two different AI approaches to removing tumor cells: first, a synthetic biology approach in which E. coli bacteria are genetically modified to locate and eliminate tumor cells; and second, a nanomedicine approach in which a polymer-based platform is used to remove cancerous cells.

Read more »

Posted: March 17th, 2016 | Author: Domingo | Filed under: Artificial Intelligence | Tags: Eugene, Hector Levesque, natural language processing, NLP, Terry Winograd, The Imitation Game, Turing Test, Winograd Schema Challenge | Comments Off on Winograd Schema Challenge: A Step beyond the Turing Test

The well-known Turing test was first proposed by Alan Turing (1950) as a practical way to defuse what seemed to him a pointless argument about whether or not machines could think. He proposed that, instead of asking such a vague question, we should ask whether a machine would be capable of producing behavior that we would say required thought in people. The sort of behavior he had in mind was participating in a natural conversation in English over a teletype, in what he called the Imitation Game. The idea, roughly, was the following: if an interrogator was unable to tell, after a long, free-flowing and unrestricted conversation with a machine, whether s/he was dealing with a person or a machine, then we should be prepared to say that the machine was thinking. The Turing test does have some troubling aspects, though.

Read more »

Posted: February 22nd, 2016 | Author: Domingo | Filed under: Artificial Intelligence | Tags: AI, Artficial Intelligence, Multi-agent Systems, Nanocolony, Nanotechnology | Comments Off on Multi-agent AI Nanorobots against Tumors

Cancer is probably one of the biggest challenges medicine faces today. For decades, radiotherapy and chemotherapy have been the standard tools for eradicating the malignant tumors our bodies wrongly develop. Nonetheless, with the remarkable evolution of nanotechnology and Artificial Intelligence, new lines of research into cancer treatment and cure have been launched.

In this post I explain my implementation of the scientific article by AI researchers M. A. Lewis and G. A. Bekey (The Behavioral Self-Organization of Nanorobots Using Local Rules, Institute of Robotics and Intelligent Systems, University of Southern California, July 7th, 1992), which concerns the use of multi-agent AI nanorobots for tumor removal.

Multi-agent systems involve a number of heterogeneous resources or units working collaboratively to solve a common problem, even though each individual may have only partial information about the problem and limited capabilities. In parallel, nanotechnology focuses on manipulating matter at dimensions similar to those of biological molecules. Current advances in the domain have received much attention from both academia and industry, largely because nanostructures exhibit unique properties and characteristics. A plethora of applications in a wide range of fields is currently available; however, among the most promising endeavors is the development of nanotechnological constructs targeted for medical use. Nanoparticles suitable for medical purposes, such as dendrimers, nanocrystals, polymeric micelles, lipid nanoparticles and liposomes, are already being manufactured. Those nanostructures exploit their inherent biological characteristics and rely on molecular and chemical interactions to reach the specified target.

Regarding the simulation I developed:

1.- The target of the colony of nanorobots, injected into a human body, was the removal of malignant brain tissue. The tumor was assumed to be relatively compact.

2.- The nanorobots communicated with each other and, in addition to this communication mechanism, each of them had the capacity to unmask the tumor: they were assumed to have a “tumor” detector which could differentiate between cells to be attacked and healthy cells. They wandered randomly until they encountered the tumor and then acted. A nanorobot could only detect a tumor if it landed directly on a square containing a tumor element. The grid on which the nanorobots moved was considered a closed world.

3.- The simulation was implemented using NetLogo (Uri Wilensky, 1999), a programmable modeling environment for simulating natural and social phenomena.
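The wandering and detection rules listed above can be illustrated with a minimal Python sketch. This is not the NetLogo code of the simulation: the grid size, the four-direction movement, the edge clamping used to model the closed world, and every function name are assumptions made for illustration only.

```python
import random

GRID_SIZE = 20  # hypothetical grid dimension, not taken from the original model


def step(position, rng):
    """Move one square in a random direction, staying inside the closed world."""
    x, y = position
    dx, dy = rng.choice([(0, 1), (0, -1), (1, 0), (-1, 0)])
    # Clamp to the grid edges: a closed world has no wrap-around.
    nx = min(max(x + dx, 0), GRID_SIZE - 1)
    ny = min(max(y + dy, 0), GRID_SIZE - 1)
    return (nx, ny)


def detects_tumor(position, tumor_cells):
    """A nanorobot senses the tumor only when it stands on a tumor square."""
    return position in tumor_cells


def wander_until_found(start, tumor_cells, rng, max_steps=100_000):
    """Random-walk from start; return (position, steps) on detection, else None."""
    position = start
    for steps in range(max_steps + 1):
        if detects_tumor(position, tumor_cells):
            return position, steps
        position = step(position, rng)
    return None
```

Passing a seeded `random.Random` instance as `rng` keeps a run reproducible, which is convenient when comparing different colony sizes.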

The initial scenario comprised a group of x nanorobots, injected into a human body, each with a certain energy level (the “energy threshold”); i.e., the amount of energy a nanorobot had available before committing to its task of attacking and destroying a tumor. When a nanorobot’s energy fell to or below a certain amount, it biodegraded and disappeared.
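As a rough sketch of this energy rule, assuming a fixed cost per action and a configurable cutoff (both hypothetical, as are the class and method names), the bookkeeping might look like this in Python:

```python
from dataclasses import dataclass


@dataclass
class Nanorobot:
    energy: float        # energy available before the robot starts its task
    alive: bool = True   # becomes False once the robot has biodegraded

    def spend(self, cost, cutoff):
        """Deduct energy; biodegrade once energy is at or below the cutoff."""
        if not self.alive:
            return  # a biodegraded robot no longer acts
        self.energy -= cost
        if self.energy <= cutoff:
            self.alive = False
```

A robot created with `Nanorobot(energy=10.0)` would survive any sequence of `spend` calls that keeps its energy strictly above `cutoff`.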

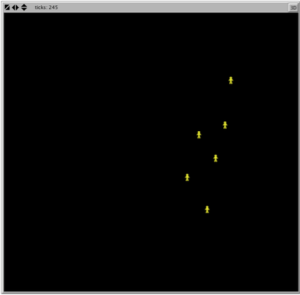

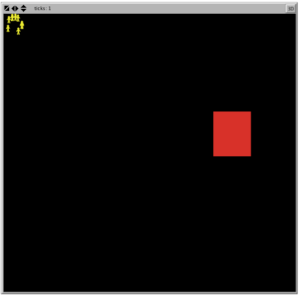

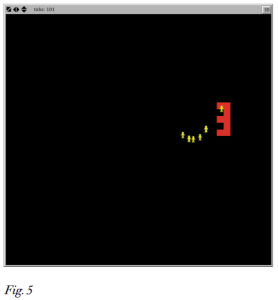

As the screenshot below shows, besides the nanorobots, the grid contained a red square which symbolized the tumor:

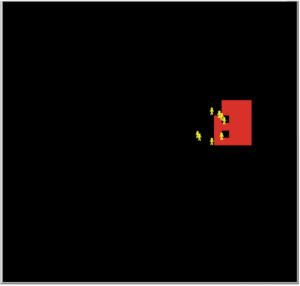

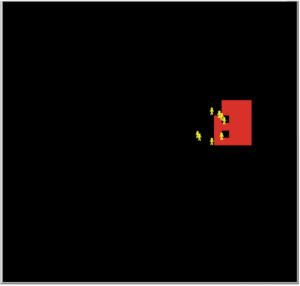

The process was the following: upon launching the simulation, the nanorobots began wandering around looking for the tumor. As soon as one of them discovered the tumor, it communicated the news and the exact location to the rest, and all of them gathered in front of the tumor:

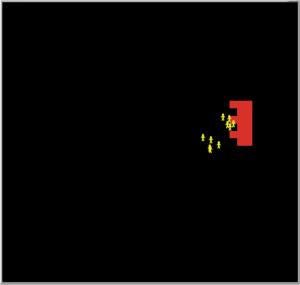

And they started attacking and destroying it:

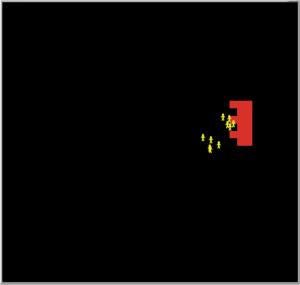
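The broadcast-and-gather step can be sketched as follows. This is an illustrative Python fragment rather than the original NetLogo procedure; it assumes that the broadcast message is simply the discoverer's grid coordinates and that robots may move diagonally, one square per tick. All names are hypothetical.

```python
def _sign(d):
    """Return -1, 0 or 1 according to the sign of d."""
    return (d > 0) - (d < 0)


def move_toward(position, target):
    """Take one step (possibly diagonal) from position toward target."""
    x, y = position
    tx, ty = target
    return (x + _sign(tx - x), y + _sign(ty - y))


def gather(robot_positions, target):
    """Advance every robot until all stand on target; return (positions, ticks)."""
    positions = list(robot_positions)
    ticks = 0
    while any(p != target for p in positions):
        # Robots already at the target stay put because both signs are 0.
        positions = [move_toward(p, target) for p in positions]
        ticks += 1
    return positions, ticks
```

With diagonal moves allowed, the number of ticks equals the largest Chebyshev distance between any robot and the broadcast location.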

Every time one of the nanorobots managed to destroy a piece of the tumor, all of them gathered again and resumed their attack from the initial point. They reasoned that, once the tumor membrane had been broken at that point, it was the weakest and easiest gap through which to get in and continue destroying the cancer:

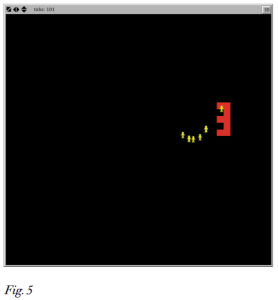

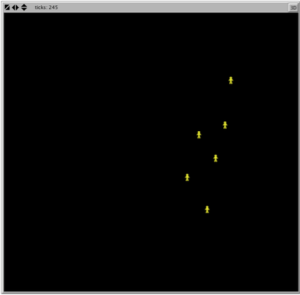
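One way to picture this regrouping rule is to order the tumor cells by their distance from the initial breach. The Python sketch below assumes Manhattan distance and a deterministic tie-break, neither of which is stated in the original model; the function name is hypothetical.

```python
def attack_order(tumor_cells, breach):
    """Order in which tumor cells are destroyed, working outward from the breach."""

    def distance(cell):
        # Manhattan distance from the initial breach point (an assumption).
        return abs(cell[0] - breach[0]) + abs(cell[1] - breach[1])

    remaining = set(tumor_cells)
    order = []
    while remaining:
        # Regroup at the breach, then take the closest remaining piece;
        # ties are broken by the cell coordinates to keep runs repeatable.
        target = min(remaining, key=lambda c: (distance(c), c))
        remaining.remove(target)
        order.append(target)
    return order
```

For a compact tumor this produces a front that spreads outward from the breach instead of attacks scattered along the membrane.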

Unlike in Lewis and Bekey’s model, in my simulation the nanorobots did exhibit artificially intelligent behavior at the end of their task: those that had been most exposed to the tumor, because they had been most active in fighting and destroying it, biodegraded themselves. Not only had their exposure to malignant tissue been higher than that of the rest, but their energy level had also dropped further after the effort, so they would not have been suitable individuals to fight a new tumor should one appear.
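A minimal sketch of this end-of-task selection, assuming that exposure is counted as the number of attacks a robot carried out and that fixed cutoffs decide who remains (the cutoffs, field names and function name are all hypothetical), could be written as:

```python
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    exposure: int   # number of attacks carried out against the tumor
    energy: float   # energy left once the tumor has been destroyed


def survivors(robots, max_exposure, min_energy):
    """Keep robots that are both lightly exposed and still well charged."""
    return [
        r for r in robots
        if r.exposure <= max_exposure and r.energy >= min_energy
    ]
```

Robots excluded by `survivors` correspond to those that biodegrade, while the rest stay behind as monitors.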

In the screenshot below, six nanorobots remained in the area, simply monitoring for the possible appearance and growth of new tumors. They could linger in the human body since their composition and structure would be fully compatible with it:

As for future work in this line of research, in my view the most important challenge in using nanorobots to cure cancer is this: how could nanorobots fight and succeed when the cancer has metastasized? In the simulation I developed, the tumor was perfectly defined and static, but often this is not the case.

To conclude, a final thought on this kind of multi-agent AI simulation exercise: because medical research by its very nature requires lengthy periods of time and adherence to strict protocols before any new development is adopted, computer simulations can offer a great advantage in accelerating both basic and applied research aimed at curing serious diseases or biological anomalies such as cancer.

Read more »

Posted: January 28th, 2016 | Author: Domingo | Filed under: Artificial Intelligence | Tags: Adaptive neuro-fuzzy inference systems, ANFISs, ANN, artificial intelligence, FISs, Fuzzy inference systems, Fuzzy Logic, High Frequency Trading algorithms, Intelligence Trading System, Moving Average Convergence/Divergence, Neural network architectures, Neurofuzzy System, Relative Strength Index, Stock Quantity Selection Component | 2 Comments »

Some of you might be familiar with the term high-frequency trading (HFT). As Daniel Lacalle explained in his book Life in the Financial Markets, HFT is a type of trading in which algorithms perform millions of operations in milliseconds. Nowadays around 30% of stock exchange transactions are carried out by HFT algorithms.

Following the line Lacalle sets out in this book, in this post I’m going to write about two distinct approaches to stock exchange trading based on artificial intelligence; namely, the Intelligence Trading System and the Stock Quantity Selection Component.

Read more »

Posted: October 28th, 2015 | Author: Domingo | Filed under: Artificial Intelligence | Tags: Baroni, Bernardi, Chomsky, Erik T. Mueller, Georgetown-IBM experiment, IBM Watson, natural language processing, NLP, Zamparelli | Comments Off on Once upon a Time in 1954…

Die Grenzen meiner Sprache bedeuten die Grenzen meiner Welt

(The limits of my language are the limits of my world)

Ludwig Wittgenstein, Tractatus Logico-Philosophicus

… On a cold January day, the Georgetown-IBM experiment took place at IBM’s headquarters in New York: the first and most influential demonstration of automatic translation in history. Developed jointly by Georgetown University and IBM, the experiment involved the automatic translation of more than 60 sentences from Russian into English. The sentences were carefully chosen; there was no syntactic analysis capable of identifying sentence structure. The approach was mainly lexicographic, based on dictionaries in which a given word was linked to some particular rules.

That episode was a success. The story goes that the euphoria amongst the researchers was such that they claimed the problem of automatic translation would be solved within three or five years… That was more than 60 years ago, and the language problem (the comprehension and generation of messages by a machine) is still pending. This is probably the last frontier separating human intelligence from artificial intelligence.

Read more »