On Graphs of Thoughts (GoT), Prompt Engineering, and Large Language Models

Posted: November 5th, 2023 | Author: Domingo | Filed under: Artificial Intelligence | Tags: AI, artificial intelligence, Large Language Models, LLMs, Ontology engineering

For a long time it seemed that the main application of graphs in the computing landscape was ontology engineering, so when my colleague Mihael shared with me the paper “Graph of Thoughts: Solving Elaborate Problems with Large Language Models”, published at the end of August, I thought we might be on the right path to rediscovering the power of these structures for representing knowledge. In that paper, the authors harness the graph abstraction as a key mechanism for enhancing prompting capabilities in LLMs.

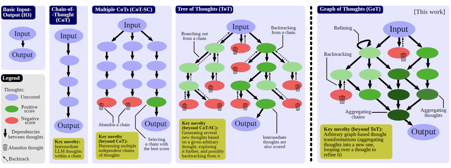

Prompt engineering is one of the central new domains of large language model research. However, designing effective prompts is a challenging task. Graph of Thoughts (GoT) is a new paradigm that enables the LLM to solve different tasks effectively without any model updates. The key idea is to model the LLM reasoning as a graph, where thoughts are vertices and dependencies between thoughts are edges.

Human task solving is often non-linear: it involves combining intermediate solutions into final ones, or changing the flow of reasoning upon discovering new insights. For example, a person could explore a certain chain of reasoning, backtrack and start a new one, then realize that a certain idea from the previous chain could be combined with the currently explored one, and merge them both into a new solution, taking advantage of their strengths and eliminating their weaknesses. GoT reflects this, so to speak, anarchic reasoning process with its graph structure.
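
To make the vertices-and-edges idea a bit more tangible, here is a minimal Python sketch. It is not the authors' code: the `Thought` class and the `generate`, `aggregate`, and `ancestors` helpers are names I made up for illustration, and the trip-planning strings stand in for real LLM output.

```python
from dataclasses import dataclass, field


@dataclass
class Thought:
    """A vertex: one intermediate piece of (here, hand-written) LLM output."""
    text: str
    parents: list["Thought"] = field(default_factory=list)  # incoming edges


def generate(parent: Thought, text: str) -> Thought:
    """Derive a new thought from a single earlier one (one incoming edge)."""
    return Thought(text, parents=[parent])


def aggregate(parents: list[Thought], text: str) -> Thought:
    """Merge several thoughts into one (several incoming edges)."""
    return Thought(text, parents=list(parents))


def ancestors(thought: Thought) -> set[str]:
    """Collect the text of every thought this one depends on."""
    seen: set[str] = set()
    stack = list(thought.parents)
    while stack:
        node = stack.pop()
        if node.text not in seen:
            seen.add(node.text)
            stack.extend(node.parents)
    return seen


task = Thought("plan a weekend trip")
chain_a = generate(task, "go by train")      # first chain of reasoning
chain_b = generate(task, "stay in a cabin")  # backtrack, start a second chain
merged = aggregate([chain_a, chain_b], "train + cabin")  # combine both chains
```

The `merged` thought has two parents, which is exactly what a chain or a tree cannot express: in those structures every thought has at most one predecessor.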

Nonetheless, let’s take a step back: besides Graph of Thoughts, there are other approaches to prompting:

- Input-Output (IO): a straightforward approach in which we use an LLM to turn an input sequence x into the output y directly, without any intermediate thoughts.

- Chain-of-Thought (CoT): one introduces intermediate thoughts a1, a2,… between x and y. This strategy was shown to significantly improve LLM performance over the plain IO baseline on tasks such as mathematical puzzles or general mathematical reasoning.

- Multiple CoTs: generating k independent CoTs and returning the one with the best output, according to a chosen metric.

- Tree of Thoughts (ToT): it enhances Multiple CoTs by modeling the process of reasoning as a tree of thoughts. A single tree node represents a partial solution. Based on a given node, the thought generator constructs a given number k of new nodes. Then, the state evaluator generates scores for each such new node.
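
The four schemes above can be compared on a toy problem. The sketch below is only an illustration under loud assumptions: there is no real LLM involved, `toy_generate` and `toy_score` are hypothetical stand-ins for the thought generator and the state evaluator, and the `pick` parameter is my own trick to make each chain deterministic (chain i always takes the i-th proposal, in place of random sampling).

```python
from typing import Callable

# Hypothetical stand-ins for a real model: `generate` proposes k candidate
# next thoughts for a state, `score` rates a state (higher is better).
Generate = Callable[[int, int], list[int]]
Score = Callable[[int], float]


def io(generate: Generate, x: int) -> int:
    """IO: one call, no intermediate thoughts."""
    return generate(x, 1)[0]


def cot(generate: Generate, x: int, depth: int, pick: int = 0) -> int:
    """CoT: a single chain x -> a1 -> a2 -> ... -> y."""
    state = x
    for _ in range(depth):
        state = generate(state, pick + 1)[pick]
    return state


def multiple_cots(generate: Generate, score: Score, x: int, depth: int, k: int) -> int:
    """Multiple CoTs: k independent chains, return the best final output."""
    return max((cot(generate, x, depth, pick=i) for i in range(k)), key=score)


def tot(generate: Generate, score: Score, x: int, depth: int, k: int, keep: int) -> int:
    """ToT: expand every kept node into k children, score them, prune."""
    frontier = [x]
    for _ in range(depth):
        children = [c for node in frontier for c in generate(node, k)]
        frontier = sorted(children, key=score, reverse=True)[:keep]
    return frontier[0]


# Toy task: get as close as possible to TARGET, starting from 1,
# using the moves "+1", "*2" and "*3".
TARGET = 10


def toy_generate(state: int, k: int) -> list[int]:
    return [state + 1, state * 2, state * 3][:k]


def toy_score(state: int) -> float:
    return -abs(TARGET - state)


best_chain = multiple_cots(toy_generate, toy_score, 1, depth=3, k=3)
best_tree = tot(toy_generate, toy_score, 1, depth=3, k=3, keep=2)
```

On this toy task the best of the three independent chains ends at 8, while the tree search reaches 10, because it can mix different moves along the way instead of committing to one chain from the start.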

Explained in a more visual way:

According to the authors, the design and implementation of GoT consist of four main components: the Prompter, the Parser, the Graph Reasoning Schedule (GRS), and the Thought Transformer:

- The Prompter prepares the prompt to be sent to the LLM, using a use-case specific graph encoding.

- The Parser extracts information from the LLM’s thoughts, and updates the graph structure accordingly.

- The GRS specifies the graph decomposition of a given task, i.e., it prescribes the transformations to be applied to LLM thoughts, together with their order and dependencies.

- The Thought Transformer applies the transformations to the graph, such as aggregation, generation, refinement, or backtracking.
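
A rough sketch of how these four pieces could fit together, following only the description above and not the authors' actual implementation. Every name here (`prompter`, `parser`, `transform`, `run_schedule`, the `Step` tuple, and the `fake_llm` stub) is an assumption of mine, and backtracking is left out to keep it short.

```python
from typing import Callable

LLM = Callable[[str], str]         # hypothetical model interface: prompt -> reply
Step = tuple[str, str, list[str]]  # (operation, output name, input names)


def prompter(operation: str, inputs: list[str]) -> str:
    """Encode the operation and its input thoughts as one prompt."""
    return f"{operation}: " + " | ".join(inputs)


def parser(reply: str) -> str:
    """Extract the thought from the raw reply (here: just strip whitespace)."""
    return reply.strip()


def transform(llm: LLM, graph: dict[str, str], step: Step) -> None:
    """Thought Transformer: apply one transformation and store the result."""
    operation, output, inputs = step
    prompt = prompter(operation, [graph[name] for name in inputs])
    graph[output] = parser(llm(prompt))


def run_schedule(llm: LLM, task: str, schedule: list[Step]) -> dict[str, str]:
    """Schedule: apply the steps in order; inputs must already be in the graph."""
    graph = {"task": task}
    for step in schedule:
        transform(llm, graph, step)
    return graph


def fake_llm(prompt: str) -> str:
    """Stub that echoes the prompt, padded with whitespace for the parser."""
    return f"  <{prompt}>  "


schedule: list[Step] = [
    ("generate", "a", ["task"]),
    ("generate", "b", ["task"]),
    ("aggregate", "ab", ["a", "b"]),  # two inputs: this is the graph part
    ("refine", "final", ["ab"]),
]
graph = run_schedule(fake_llm, "T", schedule)
```

The schedule is plain data, so the order and dependencies of the transformations are fixed before any call to the model is made; swapping `fake_llm` for a real client would be the only change needed to try it against an actual LLM.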

Finally, the authors evaluate GoT on four use cases (sorting, keyword counting, set operations, and document merging) and compare it to other prompting schemes in terms of quality, cost, latency, and volume. The authors show that GoT outperforms other schemes, especially for tasks that can be naturally decomposed into smaller subtasks that are solved individually and then merged into a final solution.
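
The sorting use case is a nice way to picture the decompose, solve, and merge pattern. The following sketch is my own illustration, not the paper's benchmark code: `solve_subtask` uses Python's `sorted` where a real setup would call the LLM, and the function names and the `score` metric (number of adjacent out-of-order pairs) are assumptions.

```python
from heapq import merge


def split(numbers: list[int], parts: int) -> list[list[int]]:
    """Decompose the task into roughly equal sublists."""
    size = max(1, -(-len(numbers) // parts))  # ceiling division, at least 1
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def solve_subtask(chunk: list[int]) -> list[int]:
    """Stand-in for an LLM call that sorts one small sublist."""
    return sorted(chunk)


def aggregate(left: list[int], right: list[int]) -> list[int]:
    """Merge two sorted sublists into one sorted list."""
    return list(merge(left, right))


def score(candidate: list[int]) -> int:
    """Count adjacent pairs that are out of order (0 means sorted)."""
    return sum(a > b for a, b in zip(candidate, candidate[1:]))


def got_sort(numbers: list[int], parts: int = 4) -> list[int]:
    thoughts = [solve_subtask(chunk) for chunk in split(numbers, parts)]
    while len(thoughts) > 1:  # merge pairwise until one thought remains
        merged = [aggregate(a, b) for a, b in zip(thoughts[::2], thoughts[1::2])]
        if len(thoughts) % 2:  # an unpaired thought moves on to the next round
            merged.append(thoughts[-1])
        thoughts = merged
    return thoughts[0] if thoughts else []


data = [5, 3, 8, 1, 9, 2, 7, 4, 6, 0]
result = got_sort(data)
```

Each subtask is small enough to be solved reliably on its own, and the merge steps are where several thoughts flow into a single one, which is the aggregation that a plain tree of thoughts does not offer.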

Summing up, another breath of fresh air in this hectically evolving world of AI, this time combining abstract reasoning, linguistics, and computer science. Pas mal at all.